8 Data Management Best Practices You Should Know

In the modern professional environment, effective data handling is not just an IT concern; it's a fundamental pillar of productivity, security, and strategic advantage. For corporate professionals and content creators, particularly within the Mac ecosystem, mastering data management can mean the difference between seamless workflows and chaotic, inefficient operations. The sheer volume of files, from critical business documents and project assets to high-resolution creative content, demands a structured approach. Without one, you risk data loss, security breaches, and countless hours wasted searching for misplaced information.

This article provides a comprehensive roundup of actionable data management best practices designed to address these challenges head-on. We will move beyond generic advice to deliver specific, practical strategies you can implement immediately. You will learn how to build a robust framework for handling your digital assets, ensuring everything from initial creation to long-term archival is managed with precision and care.

Our goal is to equip you with the knowledge to optimize your entire data workflow. We will explore essential topics including:

- Establishing a clear Data Governance Framework.

- Implementing Master Data Management (MDM) for consistency.

- Ensuring high standards of Data Quality.

- Strengthening Data Security and protecting privacy.

- Managing the complete Data Lifecycle.

- Streamlining Data Integration and Interoperability.

- Executing reliable Data Backup and Disaster Recovery plans.

- Maintaining thorough Data Documentation and Metadata.

By adopting these practices, you can transform your data from a liability into a well-organized, secure, and accessible asset that powers your professional success. Let's dive into the core principles that will redefine how you manage your most valuable information.

1. Data Governance Framework

A Data Governance Framework is not just a single practice; it is the foundational blueprint that underpins all other data management best practices. It establishes a formal structure of policies, rules, standards, and processes for managing an organization's data assets. This framework ensures that data is handled consistently and securely throughout its entire lifecycle, from creation to archival or deletion. It clarifies who can take what action, upon what data, in what situations, and using what methods.

For creative professionals and corporate teams on Mac, this might seem abstract, but it's profoundly practical. It means knowing exactly where final project files are stored, who has the authority to approve changes, and what the protocol is for sharing sensitive client data. A robust governance framework transforms data from a chaotic liability into a strategic asset, driving better decision-making and operational efficiency.

Why It's a Top Practice

Implementing a Data Governance Framework is crucial because it provides the structure needed for data to be trustworthy, secure, and valuable. Without it, even the most sophisticated tools and talented teams can fall victim to data silos, inconsistencies, and security breaches. It's the difference between a well-organized library and a messy pile of books.

Companies like Netflix leverage data governance to power their recommendation engine, ensuring the data feeding their algorithms is high-quality and relevant. Similarly, global enterprises like Procter & Gamble rely on it to manage supply chain data, enabling them to operate efficiently across worldwide markets.

Actionable Implementation Tips

Getting started with data governance doesn't require a massive, immediate overhaul. A phased approach is often the most successful.

- Start Small: Begin by identifying your most critical data domains. For a marketing agency, this might be client project files and performance analytics. For a software company, it could be user data and source code. Focus on governing these high-value assets first.

- Engage Stakeholders: Involve business users from the start. The people who create and use the data every day, like your Mac-based design team or financial analysts, have the best understanding of its real-world context and challenges.

- Establish Clear Metrics: Define what success looks like. This could be a reduction in time spent searching for files, a decrease in data-related errors, or an improvement in compliance audit scores.

- Leverage Automation: Use tools to enforce your new policies. This can include file management software that automates naming conventions or access control systems that restrict permissions based on predefined roles.
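As a rough illustration of the "Leverage Automation" tip, the sketch below checks file names against a naming convention. The convention itself (`CLIENT_project-name_YYYY-MM-DD_vNN.ext`) and the function names are assumptions made up for this example, not a standard; swap in whatever pattern your own governance policy defines.

```python
import re
from pathlib import Path

# Hypothetical convention: CLIENT_project-name_YYYY-MM-DD_vNN.ext
NAME_PATTERN = re.compile(
    r"^[A-Z0-9]+_[a-z0-9-]+_\d{4}-\d{2}-\d{2}_v\d{2}\.[a-z0-9]+$"
)

def is_compliant(filename: str) -> bool:
    """Return True if the file name follows the assumed convention."""
    return NAME_PATTERN.match(filename) is not None

def find_violations(folder: Path) -> list[Path]:
    """List non-hidden files under `folder` whose names break the convention."""
    return sorted(
        p for p in folder.rglob("*")
        if p.is_file()
        and not p.name.startswith(".")
        and not is_compliant(p.name)
    )
```

Calling `find_violations(Path("/path/to/projects"))` on a shared project folder would return the files that need renaming, which could feed a weekly report rather than block anyone's work.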

2. Master Data Management (MDM)

Master Data Management (MDM) is a technology-enabled discipline focused on creating a single, authoritative “golden record” for an organization's most critical data assets. Where data governance sets the rules, MDM enforces them for core business entities like customers, products, suppliers, and locations. It works by consolidating, cleaning, and synchronizing this master data across disparate IT systems, eliminating the costly inconsistencies that arise from data silos.

For Mac-based teams, this addresses a common pain point: having multiple versions of a client's contact information or conflicting product SKUs across sales, marketing, and support applications. MDM creates a central, trusted source of truth that all systems can rely on. This ensures that when a designer pulls client details for a project brief or a finance professional prepares an invoice, they are working with the same accurate, up-to-date information.

Why It's a Top Practice

MDM is a critical data management best practice because it directly tackles data redundancy and inconsistency, which are major obstacles to operational efficiency, analytics, and customer experience. Without a master view, businesses operate with a fragmented understanding of their own operations. MDM provides the unified foundation necessary for reliable business intelligence and seamless cross-departmental workflows.

Global giants like Coca-Cola use MDM to maintain a consistent view of customers and products across more than 200 countries, enabling cohesive global marketing campaigns. Similarly, General Electric relies on product MDM to manage millions of complex industrial parts across its vast supply chain, ensuring engineering and manufacturing are perfectly aligned.

Actionable Implementation Tips

Implementing MDM is a significant undertaking, but a strategic, phased approach can lead to powerful results. Success hinges on careful planning and focusing on business value from the outset.

- Start with One Domain: Don’t try to boil the ocean. Begin with a single, high-impact data domain that causes the most pain, such as "customer" or "product" data. Proving value here will build momentum and secure buy-in for future expansion.

- Prioritize Data Quality: An MDM system is only as good as the data within it. Before implementation, invest in data cleansing and quality improvement initiatives. Use data profiling tools to identify and correct inconsistencies in your source systems first.

- Secure Executive Sponsorship: MDM is not just an IT project; it is a business transformation initiative. Gaining strong, visible sponsorship from executive leadership is essential to navigate organizational politics and secure the necessary resources.

- Plan for Change Management: Introducing a single source of truth often changes how people work. Develop a comprehensive change management plan to communicate the benefits, provide training, and manage the transition for teams who are used to their old data silos.
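To make the "golden record" idea more tangible, here is a minimal sketch of one possible merge rule: for each field, keep the most recently updated non-empty value. The field names, the `updated` key, and the sample records are hypothetical, and real MDM platforms apply far richer matching and survivorship rules than this.

```python
from datetime import date

def build_golden_record(records: list[dict]) -> dict:
    """Merge duplicate records, preferring the newest non-empty value per field.

    Each record is assumed to carry an `updated` date; every other key is
    treated as an attribute of the entity.
    """
    golden: dict = {}
    # Process oldest first so newer non-empty values overwrite older ones.
    for record in sorted(records, key=lambda r: r["updated"]):
        for field, value in record.items():
            if field == "updated":
                continue
            if value not in (None, ""):
                golden[field] = value
    return golden

# Hypothetical copies of the same client held in two systems.
crm = {"name": "Acme Corp", "email": "", "phone": "555-0100",
       "updated": date(2023, 5, 1)}
billing = {"name": "ACME Corporation", "email": "ap@acme.example", "phone": "",
           "updated": date(2024, 2, 10)}

golden = build_golden_record([crm, billing])
```

Here the newer billing record supplies the name and email, while the phone number survives from the older CRM record because billing left it blank.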

3. Data Quality Management

Data Quality Management is a systematic approach to ensuring that data is accurate, complete, consistent, and reliable across all systems. It's the process of continuously monitoring and improving data health to ensure it is fit for its intended purpose, whether that's for operational use, analytics, or strategic decision-making. This involves a combination of technology, processes, and people working together to identify, measure, and correct data flaws.

For Mac-based professionals, this translates to tangible benefits. It means avoiding the embarrassment of sending a client an invoice with the wrong name, ensuring marketing campaigns target the correct audience segment, and trusting that the financial data used for quarterly reports is free from errors. Poor data quality can undermine the most creative projects and sharpest business strategies, making its management a critical discipline.

Why It's a Top Practice

Prioritizing Data Quality Management is essential because low-quality data leads directly to flawed insights, operational inefficiencies, and poor business outcomes. It is a core component of effective data management best practices because without high-quality data, any analytics or business intelligence initiative is built on a shaky foundation. Trust in data is paramount, and this practice is how that trust is built and maintained.

Companies that excel at this see significant returns. Amazon's powerful recommendation engine and seamless user experience rely on meticulously managed product and customer data. Similarly, logistics giant UPS depends on high-quality address data to achieve its remarkable delivery accuracy and operational efficiency, preventing costly reroutes and delays.

Actionable Implementation Tips

Implementing a data quality program can be approached methodically without disrupting your entire workflow. The key is to be proactive rather than reactive.

- Define Quality Rules: Work with business teams to establish clear, measurable rules for what constitutes "good" data. For a design agency, a rule might be that all client project folders must contain a signed contract and a project brief before work commences.

- Check at the Source: The most effective place to catch errors is at the point of entry. Implement validation checks in your forms, databases, and applications to prevent bad data from ever entering your systems.

- Use Automation: Leverage tools to continuously monitor data quality. Solutions from providers like Informatica or Talend can automate the process of profiling data, identifying anomalies, and alerting the right people to fix them.

- Train Your Team: Educate your staff on the importance of data quality and their role in maintaining it. A creative team that understands why consistent file naming and metadata tagging are crucial is more likely to adhere to the standards.
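The "Define Quality Rules" and "Check at the Source" tips can be sketched as a small validation function that runs before a record is saved. The three rules and the field names below are illustrative assumptions based on the design-agency example above, not a complete rule set.

```python
import re

# Deliberately loose email check: something@something.something
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def validate_client(record: dict) -> list[str]:
    """Return a list of rule violations; an empty list means the record passes."""
    errors = []
    if not record.get("name", "").strip():
        errors.append("name is required")
    if not EMAIL_RE.match(record.get("email", "")):
        errors.append("email is not well-formed")
    if not record.get("signed_contract", False):
        errors.append("signed contract is missing")
    return errors
```

Returning every violation at once, rather than stopping at the first, lets a form show the user everything that needs fixing in a single pass.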

4. Data Security and Privacy

Data Security and Privacy involves a comprehensive suite of technical, administrative, and physical safeguards designed to protect sensitive information. It's about building a fortress around your data assets, shielding them from unauthorized access, breaches, and regulatory non-compliance. This practice encompasses everything from encryption and access controls to privacy policies and risk management, ensuring data integrity and confidentiality across its lifecycle.

For Mac-based teams handling client contracts, proprietary creative work, or personal employee information, robust security isn't optional; it's a core business function. It means ensuring that a shared video project file is encrypted both in transit and at rest, and that only authorized personnel can access sensitive financial spreadsheets. Effective security measures transform data from a potential vulnerability into a trusted, protected asset.

Why It's a Top Practice

In an era of constant cyber threats and stringent privacy laws like GDPR and CCPA, prioritizing data security is non-negotiable. A single data breach can lead to devastating financial losses, reputational damage, and legal penalties. Strong security and privacy practices are fundamental to building and maintaining customer trust, which is a critical component of any successful data management best practices strategy.

Companies like Apple have built their brand on privacy, using techniques like differential privacy to analyze user data without compromising individual identities. Likewise, financial institutions adhere to strict PCI DSS compliance to protect payment data, demonstrating that security is a competitive differentiator. For a comprehensive understanding of protecting your organization's sensitive information, explore essential Data Security Best Practices.

Actionable Implementation Tips

Implementing a robust security posture can be approached systematically without disrupting workflows.

- Classify Your Data: Not all data is equally sensitive. Start by categorizing data into tiers like "Public," "Internal," and "Confidential." This allows you to apply the strongest protections, like end-to-end encryption, to your most critical assets.

- Implement Defense-in-Depth: Don’t rely on a single security measure. Layer your defenses by combining firewalls, access controls, encryption, and regular security audits. Pair this with a "Zero Trust" mindset, a related model that assumes no user or device is automatically trusted and verifies every access request.

- Train Your Team: Human error remains a leading cause of data breaches. Conduct regular training on phishing awareness, strong password hygiene, and secure data handling protocols. Empower your employees to be the first line of defense.

- Regularly Test and Update: Security is not a one-time setup. Routinely test your security controls through penetration testing and vulnerability scans. Keep all software, especially on your macOS devices, updated to patch potential vulnerabilities. Enhancing user privacy through advanced technology is a key part of this ongoing effort; you can learn more about enhanced user privacy.
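One way to put the "Classify Your Data" tip into practice is to record, per tier, the minimum controls you expect and then check each asset against them. The tiers come from the tip above; the control names and the mapping are hypothetical placeholders for your own policy.

```python
from enum import IntEnum

class Tier(IntEnum):
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3

# Hypothetical minimum controls per tier; adjust to your own policy.
REQUIRED_CONTROLS = {
    Tier.PUBLIC: set(),
    Tier.INTERNAL: {"access_control"},
    Tier.CONFIDENTIAL: {
        "access_control",
        "encryption_at_rest",
        "encryption_in_transit",
    },
}

def missing_controls(tier: Tier, applied: set[str]) -> set[str]:
    """Return the controls the tier requires that are not yet applied."""
    return REQUIRED_CONTROLS[tier] - applied
```

For example, a confidential client contract that only has access controls in place would come back with both encryption controls flagged as missing.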

5. Data Lifecycle Management

Data Lifecycle Management (DLM) is a policy-based approach to managing data from its creation to its eventual destruction. This practice optimizes storage costs, system performance, and regulatory compliance by treating data not as a static entity but as an asset with a finite, valuable lifespan. DLM involves setting automated policies for migrating, archiving, and deleting data based on its changing business value and legal requirements over time.

For Mac-based creative teams and corporate professionals, this means establishing an automated flow for data. A new video project file, for example, might initially live on high-speed, expensive storage for active editing. After a month, it could automatically move to slower, more cost-effective cloud storage for review, and after a year, be archived to long-term cold storage or deleted entirely if it's no longer needed. This systematic approach prevents the costly and risky accumulation of unmanaged data.

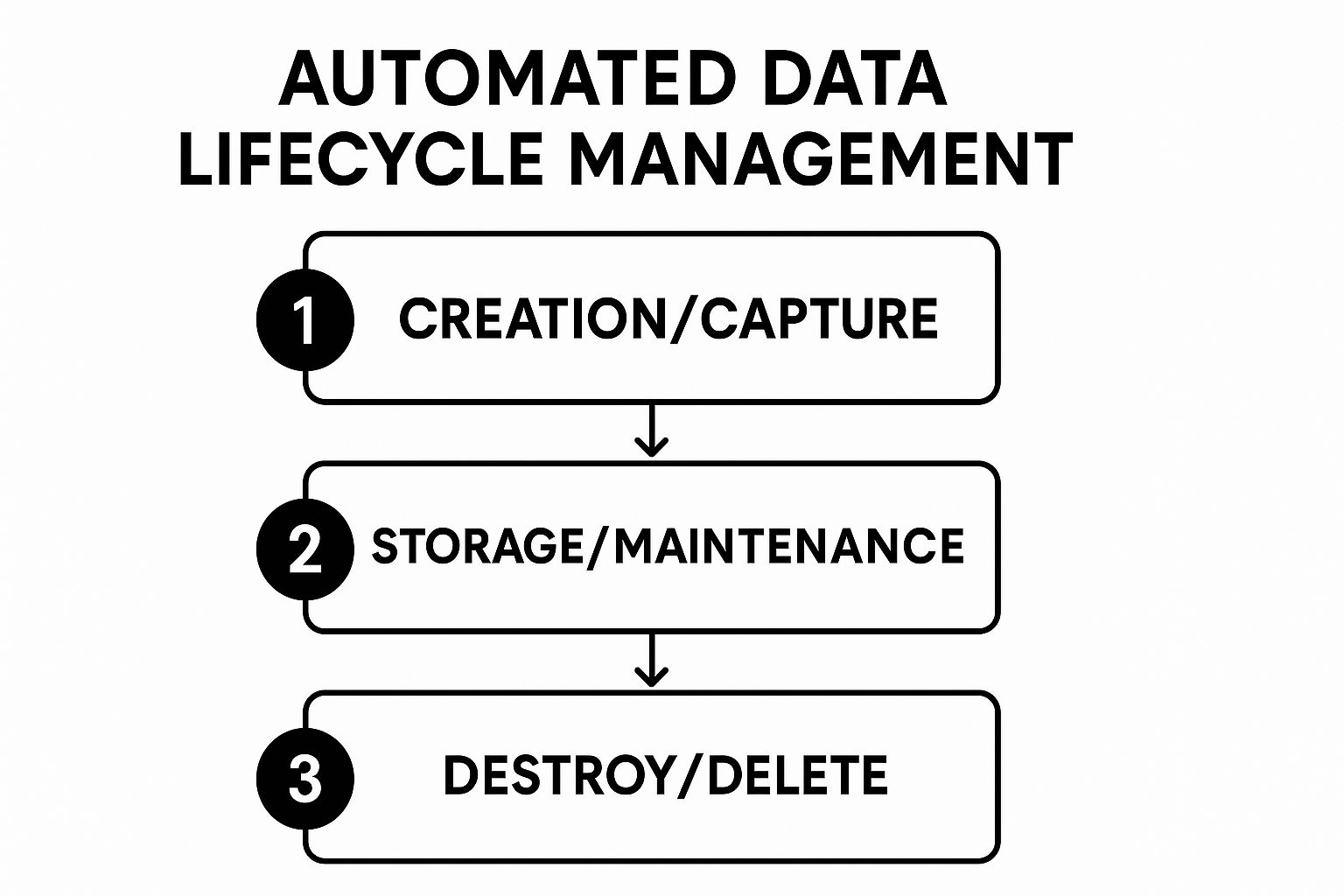

The following process flow infographic illustrates the three core stages of automated Data Lifecycle Management, showing how data moves from creation to its final disposition.

This visualization highlights the journey of data through distinct phases, each governed by specific policies to ensure it is handled efficiently and securely throughout its existence.

Why It's a Top Practice

Implementing DLM is one of the most effective data management best practices for controlling explosive data growth and its associated costs. It ensures that valuable storage resources are reserved for active, high-priority data while less critical data is tiered appropriately. This structured process is essential for meeting compliance mandates, such as GDPR or HIPAA, which constrain how long certain types of data may be kept and, in some cases, how long they must be retained before destruction.

Companies like Netflix manage petabytes of video content by moving files between different storage tiers based on viewership data, optimizing both cost and streaming performance. Similarly, financial institutions archive transaction records according to strict regulatory timelines, a process made manageable only through automated DLM. Solutions from IBM, NetApp, and Dell EMC have popularized this approach in the enterprise.

Actionable Implementation Tips

Adopting Data Lifecycle Management can start with a targeted focus and expand over time. A proactive strategy is key to avoiding storage overruns and compliance failures.

- Define Clear Retention Policies: Classify your data (e.g., project files, financial records, client communications) and define a specific lifecycle for each type. Specify how long it stays in active storage, when it gets archived, and when it should be deleted.

- Implement Automated Enforcement: Use storage management software or cloud services that support policy-based automation. This removes the burden of manual migration and deletion from your team, ensuring policies are applied consistently.

- Ensure Legal Hold Capabilities: Your DLM system must be able to suspend deletion policies for data subject to litigation or investigation. This legal hold capability is a non-negotiable compliance requirement.

- Regularly Review and Update: Business needs and regulations change. Review your DLM policies at least annually to ensure they still align with your operational goals and legal obligations.
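The retention and legal-hold tips above can be sketched as a single decision function. The thresholds mirror the video-project example from earlier in this section (about a month on fast storage, about a year before deletion); the class, function, and action names are assumptions for illustration only.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class RetentionPolicy:
    hot_days: int = 30            # keep on fast storage for this long
    delete_after_days: int = 365  # eligible for deletion after this age

def disposition(last_used: date, today: date,
                policy: RetentionPolicy, legal_hold: bool = False) -> str:
    """Decide what should happen to a file based on its age and hold status."""
    age = (today - last_used).days
    if age < policy.hot_days:
        return "keep-hot"
    if age < policy.delete_after_days:
        return "move-to-cold"
    # A legal hold suspends deletion, whatever the age.
    return "retain-legal-hold" if legal_hold else "delete"
```

A scheduled job could call `disposition` for each file and hand the result to whatever storage tool actually moves or deletes data; keeping the decision separate from the action makes the policy easy to review and test.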

6. Data Integration and Interoperability

Data Integration and Interoperability refer to the process of combining data from various disparate sources, formats, and systems into a unified, consistent view. This practice enables seamless data flow across an entire organization, breaking down information silos. It involves technologies like ETL (Extract, Transform, Load), API management, and real-time data streaming to ensure that data, regardless of its origin, can be accessed and analyzed cohesively.

For creative agencies or corporate departments using Macs, this means connecting project management tools like Asana, financial software, and cloud storage into a single source of truth. Instead of manually collating data from different platforms for a client report, integration automates the flow of information. This transforms isolated data points from various applications into a powerful, interconnected ecosystem, enabling smarter workflows and deeper business insights.

Why It's a Top Practice

Data integration is a critical component of modern data management best practices because it unlocks the collective value of an organization's data. Without it, valuable information remains trapped in isolated applications, leading to incomplete analyses and inefficient operations. It's the technical backbone that allows a business to operate as a cohesive unit rather than a collection of disconnected parts.

Tech giants exemplify its power. Spotify processes billions of daily events through a real-time data pipeline to personalize user experiences, while Airbnb integrates data from listings, bookings, and user reviews to manage its global marketplace. Similarly, Tesla integrates vast amounts of vehicle sensor data to refine its autonomous driving algorithms.

Actionable Implementation Tips

Implementing a successful data integration strategy requires careful planning and a focused approach. It’s about building bridges between systems in a smart, scalable way.

- Start with High-Value Use Cases: Don't try to connect everything at once. Identify a critical business process that suffers from data silos, such as customer journey tracking or supply chain visibility, and focus your initial integration efforts there.

- Standardize Formats: Use standardized data formats like JSON or Parquet and common protocols to simplify communication between systems. This reduces the complexity of data transformation and makes the integration more robust.

- Establish Data Lineage: Document how data flows from its source to its destination, including all transformations. This is crucial for troubleshooting, ensuring data quality, and meeting compliance requirements.

- Plan for Scale: Design your integration architecture to handle future growth in data volume and velocity. Leveraging cloud-based platforms like Snowflake or Azure Data Factory can provide the necessary scalability and performance. To delve deeper into optimizing data pipelines and ensuring seamless data flow, explore our guide on data integration best practices.
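As a small-scale illustration of "Standardize Formats" and "Establish Data Lineage," the sketch below maps rows from two differently shaped sources into one common schema and tags each row with where it came from. The source systems, column names, and the `_source` field are all hypothetical.

```python
import json

def from_project_tool(row: dict) -> dict:
    """Normalize a row exported from a (hypothetical) project tool."""
    return {
        "client": row["Client Name"].strip(),
        "hours": float(row["Hours"]),
        "_source": "project_tool",  # lineage: which system produced this row
    }

def from_billing(row: dict) -> dict:
    """Normalize a row from a (hypothetical) billing system that logs minutes."""
    return {
        "client": row["customer"].strip(),
        "hours": row["billed_minutes"] / 60,
        "_source": "billing",
    }

def integrate(project_rows: list[dict], billing_rows: list[dict]) -> list[dict]:
    """Combine both sources into one list that shares a single schema."""
    return ([from_project_tool(r) for r in project_rows]
            + [from_billing(r) for r in billing_rows])

unified = integrate(
    [{"Client Name": " Acme ", "Hours": "2.5"}],
    [{"customer": "Acme", "billed_minutes": 90}],
)
as_json = json.dumps(unified, indent=2)  # standardized interchange format
```

Keeping one small transform function per source means that when a system changes its export format, only that function needs updating.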

7. Data Backup and Disaster Recovery

A Data Backup and Disaster Recovery (DR) plan is a critical safety net that protects an organization's most valuable asset: its data. This practice involves creating systematic copies of data to restore in case of loss, corruption, or system failure. It goes beyond simple backups by also establishing a documented plan to recover IT infrastructure and resume business operations after a catastrophic event, ensuring business continuity.

For Mac-based professionals, this means having a strategy that accounts for everything from a crashed hard drive wiping out a project portfolio to a natural disaster impacting an office. A solid backup and DR plan ensures that your critical files, from client deliverables to financial records, are not only preserved but can be restored quickly to minimize downtime and financial loss. It is a fundamental component of resilient data management best practices.

Why It's a Top Practice

Implementing a robust backup and DR strategy is non-negotiable because data loss is not a matter of if, but when. Hardware failure, cyberattacks, human error, and natural disasters are all real threats. Without a tested recovery plan, a company can face devastating consequences, including prolonged downtime, reputational damage, and permanent loss of irreplaceable information.

Global giants like Netflix exemplify this with a multi-region backup strategy that ensures their service remains available worldwide even if one data center goes down. Similarly, financial institutions rely on sophisticated DR plans to protect trading systems, where even seconds of downtime can result in millions in losses. This level of preparedness is what separates a minor inconvenience from a business-ending catastrophe.

Actionable Implementation Tips

Building an effective backup and DR plan requires a structured, proactive approach. Establishing and adhering to best practices for secure data backup is non-negotiable if you want your data to remain available and recoverable.

- Follow the 3-2-1 Rule: Maintain at least three copies of your data on two different types of media, with at least one copy stored offsite. For Mac users, this could be your main drive, a Time Machine backup on an external disk, and a cloud backup.

- Automate and Test: Automate your backup processes using reliable software to eliminate human error. Crucially, regularly test your backups by performing trial restores to confirm the data is intact and the recovery process works as expected.

- Document Everything: Create a clear, step-by-step disaster recovery document. This guide should detail who is responsible for what, the exact procedures to follow, and contact information for key personnel and vendors.

- Compress for Efficiency: Before backing up large files, such as video projects or design assets, use compression tools. This reduces storage costs and dramatically speeds up transfer times, making offsite and cloud backups more efficient. Tools like Compresto offer advanced solutions for managing large files, making backups faster and more manageable.
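The "Automate and Test" tip calls for trial restores. One simple way to confirm a restored file is intact is to compare its checksum with the original's, as in the sketch below. The function names are made up for this example, and it assumes you can still read the original file (otherwise, store the checksum at backup time and compare against that).

```python
import hashlib
from pathlib import Path

def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large video or design files fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_restore(original: Path, restored: Path) -> bool:
    """Return True if the restored copy is byte-for-byte identical."""
    return sha256_of(original) == sha256_of(restored)
```

A mismatch means the backup or the restore process corrupted the file, which is exactly what you want to discover during a test rather than after a real failure.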

8. Data Documentation and Metadata Management

Effective data documentation and metadata management involve the systematic creation and upkeep of information about your data assets. This isn't just about noting a file's creation date; it’s about capturing its structure, meaning, lineage, and purpose. This practice transforms raw data into a discoverable and understandable resource by creating business glossaries, data dictionaries, and tracking data lineage.

For Mac-based teams, this means the difference between a folder full of ambiguously named "Final_Draft_v3.psd" files and a well-organized system where anyone can quickly understand a file's context, its relationship to other project assets, and its intended use. Strong documentation ensures that when a new designer joins the team, they can get up to speed without needing to ask what every single asset is. That boost to productivity makes it one of the most vital data management best practices.

Why It's a Top Practice

Data without context is just noise. Documentation and metadata management provide that crucial context, making data trustworthy and accessible. It powers everything from regulatory compliance to efficient analytics. When your data is well-documented, you democratize its use, allowing more team members to leverage it for insights without relying on a small group of experts who hold all the institutional knowledge.

Tech giants have built entire ecosystems around this concept. LinkedIn's internal data catalog, DataHub, serves thousands of engineers and data scientists, allowing them to discover and understand data across the company. Similarly, Airbnb’s investment in a centralized metadata platform was key to enabling its teams to find and trust the data needed to make business decisions.

Actionable Implementation Tips

Implementing robust documentation doesn't have to be a manual, tedious process. Modern tools and a smart strategy can make it seamless.

- Automate Metadata Capture: Use tools that automatically extract technical metadata from your files and systems. For creative teams, software like Adobe Bridge or specialized Digital Asset Management (DAM) systems can capture metadata like camera settings, software versions, and color profiles automatically.

- Encourage Collaborative Documentation: Data documentation is a team sport. Use wiki-based platforms or tools integrated into your workflow that allow the users who know the data best to contribute their knowledge easily.

- Integrate with Existing Workflows: Don't create a separate, isolated documentation process. Integrate metadata tagging and documentation steps directly into your existing project management or development workflows to ensure it becomes a natural habit.

- Audit for Accuracy: Regularly review your documentation to ensure it remains accurate and complete. Outdated documentation can be more misleading than no documentation at all. Set quarterly reminders to audit key data assets.
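For teams without a DAM system, a lightweight take on "Automate Metadata Capture" is to write a small JSON "sidecar" file next to each asset, combining technical metadata read from the file system with a human-written description. The sidecar naming scheme (`<file>.meta.json`) and the field names are assumptions for this sketch, not an established format.

```python
import json
from datetime import datetime, timezone
from pathlib import Path

def describe(path: Path, description: str, owner: str) -> dict:
    """Combine file-system metadata with human-supplied context."""
    stat = path.stat()
    return {
        "file": path.name,
        "size_bytes": stat.st_size,
        "modified_utc": datetime.fromtimestamp(
            stat.st_mtime, tz=timezone.utc
        ).isoformat(),
        "description": description,
        "owner": owner,
    }

def write_sidecar(path: Path, description: str, owner: str) -> Path:
    """Write `<file>.meta.json` next to the asset and return its path."""
    sidecar = path.with_name(path.name + ".meta.json")
    sidecar.write_text(json.dumps(describe(path, description, owner), indent=2))
    return sidecar
```

Because the sidecar travels with the asset, the context survives being copied to another drive or handed to a new team member.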

8-Point Data Management Best Practices Comparison

| Item | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Data Governance Framework | High: policy and organizational setup | Significant initial and ongoing effort | Improved data quality, compliance, risk reduction | Enterprise-wide data management, compliance enforcement | Trusted data, operational efficiency, risk mitigation |
| Master Data Management (MDM) | High: technical integration & governance needed | High upfront software and services cost | Single source of truth, reduced duplicates, better analytics | Core entity data consistency across systems | Eliminates data silos, improves customer experience |
| Data Quality Management | Medium: needs rules and tools deployment | Ongoing investment in monitoring tools | Enhanced data accuracy, compliance, and trust | Continuous data accuracy improvement across systems | Better decision-making, error reduction |
| Data Security and Privacy | High: specialized security expertise required | Continuous monitoring & security tools | Data protection, regulatory compliance, reduced breach risks | Protection of sensitive data, regulatory environments | Customer trust, risk mitigation, compliance assurance |
| Data Lifecycle Management | Medium-High: policy configuration & automation | Ongoing monitoring & storage resources | Optimized storage, compliance, improved performance | Managing data aging, cost, and compliance lifecycle stages | Cost savings, performance improvements |
| Data Integration & Interoperability | High: complex across heterogeneous systems | High computational and network demand | Unified data views, enhanced analytics and real-time insights | Enterprise-wide data flow and analytics | Reduces silos, supports digital transformation |
| Data Backup & Disaster Recovery | Medium: infrastructure and procedural setup | Significant storage and network resources | Data loss protection, business continuity | Disaster preparedness, regulatory compliance | Rapid recovery, regulatory compliance |
| Data Documentation & Metadata Management | Medium: setup & ongoing updates required | Moderate effort in automation and collaboration | Improved discoverability, compliance, impact analysis | Data literacy, governance, onboarding | Faster data search, governance support |

Final Thoughts

Navigating the complex landscape of digital information requires more than just good intentions; it demands a structured, strategic approach. Throughout this guide, we've explored the foundational pillars of effective data management, moving from high-level governance frameworks to the granular, everyday practices that protect and optimize your most valuable digital assets. The journey from data chaos to data clarity is not instantaneous, but it is achievable by methodically implementing these core principles.

The essence of strong data management best practices is not about adopting a rigid, one-size-fits-all system. Instead, it's about creating a resilient, adaptable ecosystem for your data. This ecosystem should be built on a clear understanding of your information's entire lifecycle, from its creation to its eventual archival or secure deletion. By establishing robust governance, you create the rules of the road, ensuring everyone in your organization understands their role and responsibilities.

From Theory to Action: Your Next Steps

Understanding these concepts is only the first step. The real transformation happens when you put them into practice. The most successful professionals, whether in a corporate setting or a creative studio, treat data management not as a background task but as an active, ongoing discipline. They recognize that well-managed data is the bedrock of efficiency, security, and innovation.

To begin your journey, consider these actionable starting points:

- Perform a Data Audit: You can't manage what you don't know you have. Start by mapping out your key data assets. Identify where they are stored, who has access, and how they are currently being used. This initial audit will reveal your biggest vulnerabilities and opportunities for improvement.

- Prioritize One Key Area: Don't try to boil the ocean. Select one practice from this guide to master first. For many, implementing a robust Data Backup and Disaster Recovery plan provides the most immediate peace of mind and tangible value. For others, focusing on Data Quality Management might be the key to unlocking better business insights.

- Empower Your Team: Data management is a team sport. Foster a culture of data literacy by providing training and clear documentation. When your entire team understands the "why" behind practices like metadata management and security protocols, compliance becomes a shared goal rather than a mandate.

The Lasting Impact of Data Mastery

Ultimately, mastering these data management best practices is an investment in your future. For the corporate professional, it translates to streamlined workflows, enhanced decision-making, and mitigated security risks. For the Mac-based content creator, it means protecting your creative output, optimizing storage, and ensuring seamless collaboration without the fear of data loss or corruption.

Think of each practice as a gear in a larger machine. A solid governance framework sets the machine in motion. Master data management ensures the gears mesh perfectly. Security protocols act as the protective casing, while a well-oiled backup strategy is your emergency power source. When all components work in harmony, the result is a powerful engine for productivity and growth. The path forward is clear: treat your data with the discipline and respect it deserves, and it will become your most powerful asset.

Ready to take control of a crucial piece of your data management puzzle? The best practices for file organization and storage optimization are made simple with Compresto. Our advanced compression tool for Mac helps you reduce file sizes without sacrificing quality, making your backup, storage, and sharing workflows faster and more efficient. Try Compresto today and see how effortless powerful file management can be.